Powering The Quiet Hardware Revolution

LLMs have become the subject of endless debate about their potential, much like speculation about how baseball stars will perform in the World Series: everybody wants a piece of the action, and hardware companies are the ultimate hustlers when it comes to getting club-level seats.

Nvidia, Intel and AMD did not start out in AI, and the decades of experience they built up elsewhere are why they seem to have the upper hand over newer silicon builders (more on those later). All three began with a focus on integrated circuits (ICs), gaming, CPUs and memory. In this article I'll look at what each of them (and a few newer competitors) has accomplished so far in AI, and how they stand out from one another.

When Nvidia was founded in 1993, the internet was still in its early stages. The founders' vision was to build top-of-the-line graphics hardware, with a business model focused on the video game market. The GeForce 256 (1999), which Nvidia marketed as the world's first GPU, was the breakthrough that established its reputation, and the company has led the field of rendering and 3D acceleration ever since. Eventually CEO Jensen Huang and his engineers realized that their real edge was parallel computing. CUDA (Compute Unified Device Architecture), introduced in 2006, let developers program GPUs for general-purpose work. Businesses and researchers soon adopted Nvidia's products as the go-to hardware for crunching big datasets and building complex algorithms.
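Here is what "parallel computing" looks like in practice. A CUDA program launches a *kernel*, a function that many GPU threads run at the same time. Each thread picks its own array element from its block and thread indices (`blockIdx.x * blockDim.x + threadIdx.x` in CUDA C++). The Python sketch below only imitates that indexing scheme and runs the "threads" one after another. `saxpy_kernel` and `launch` are hypothetical names, not part of any Nvidia API.

```python
# Hypothetical illustration of CUDA's thread-indexing model in plain Python.
# On a real GPU every (block, thread) pair runs concurrently;
# here we loop over them sequentially just to show the indexing.

def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Work done by one 'thread': out[i] = a * x[i] + y[i]."""
    i = block_idx * block_dim + thread_idx  # mirrors blockIdx.x * blockDim.x + threadIdx.x
    if i < len(x):  # guard: the last block may contain spare threads
        out[i] = a * x[i] + y[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Simulate launching grid_dim blocks of block_dim threads each."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = [float(v) for v in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)  # → [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

The `if i < len(x)` guard mirrors a common CUDA idiom. The grid is rounded up to whole blocks, so a few threads in the last block have no element to process.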

When R&D in machine learning took off in the mid-2010s, Nvidia added tensor cores to its GPUs, starting with the Volta generation in 2017. These cores vastly sped up the matrix computations at the heart of deep learning. In 2020 the company introduced the A100, followed in 2022 by the H100, which moved to faster HBM3 memory. Two things pushed Nvidia's valuation past a trillion dollars in such a short timeframe: SK hynix's timely delivery of HBM3 (high-bandwidth memory), and a custom software platform (CUDA) designed to get the most out of such complex hardware.
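Tensor cores gain their speed by performing a small matrix multiply-accumulate, D = A × B + C, as a single hardware operation. On Volta-generation GPUs each tensor core works on 4×4 tiles. Real hardware also mixes precisions (e.g. FP16 inputs with FP32 accumulation), which this sketch ignores. The pure-Python sketch below assumes square matrices whose size is a multiple of the tile size. `mma_tile` stands in for one tensor-core operation, and `tiled_matmul` builds a full matrix product by accumulating tile products. Both names are hypothetical illustrations, not CUDA or cuBLAS functions.

```python
# Hypothetical sketch: a tensor core's unit of work is a small fused
# multiply-accumulate on fixed-size tiles, D = A x B + C.
TILE = 4  # Volta-generation tensor cores operate on 4x4 tiles


def mma_tile(a, b, c):
    """One tile-sized multiply-accumulate: returns A @ B + C for TILE x TILE lists."""
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(TILE)) for j in range(TILE)]
        for i in range(TILE)
    ]


def get_tile(m, row, col):
    """Copy the TILE x TILE sub-matrix whose top-left corner is (row, col)."""
    return [r[col:col + TILE] for r in m[row:row + TILE]]


def tiled_matmul(a, b):
    """Multiply square matrices (size a multiple of TILE) one tile at a time."""
    n = len(a)
    out = [[0.0] * n for _ in range(n)]
    for i in range(0, n, TILE):
        for j in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]  # the C/D accumulator tile
            for k in range(0, n, TILE):
                # accumulate A[i,k] x B[k,j] into the running tile
                acc = mma_tile(get_tile(a, i, k), get_tile(b, k, j), acc)
            for di in range(TILE):
                out[i + di][j:j + TILE] = acc[di]
    return out


n = 8
a = [[float(i + j) for j in range(n)] for i in range(n)]
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
print(tiled_matmul(a, identity) == a)  # → True
```

Splitting the work this way matters because each accumulator tile stays small enough to keep close to the compute units while the loop over `k` streams in the rest of the matrices.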

Let's analyze how other underdog companies compete with that giant…

Intel was one of the very first CPU companies in America, and its introduction of the first commercial microprocessor in the early '70s paved the way for personal computing. First, though, let's talk about AMD. AMD (Advanced Micro Devices) started out as a direct competitor to Intel with similar lines of hardware. It decided to take a shot at the GPU market and acquired the graphics card company ATI Technologies in 2006.

AMD innovated heavily in memory bandwidth early in its GPU work (it co-developed HBM with SK hynix). It has also gained a reputation for building cost-efficient hardware, especially its Instinct MI series accelerators. It has its own counterpart to CUDA called ROCm (Radeon Open Compute), but developers have been slow to adopt it, which makes AMD less competitive in that regard.

To close that gap, AMD recently acquired Finland-based Silo AI, a company specializing in training large AI models, for roughly $665 million. To strengthen its hardware design and data center infrastructure, it also bought New Jersey-based ZT Systems for $4.9 billion. AMD's commitment to open source may attract new developers, particularly in academia, but for now it faces an uphill battle to catch up with CUDA.

Back to Intel. First, let's acknowledge that the personal computer as we know it wouldn't exist without the x86 instruction set architecture (ISA). Intel has regarded itself as the leader in microprocessor manufacturing ever since the 8086 chip, so it has focused almost all of its resources and R&D on CPUs.

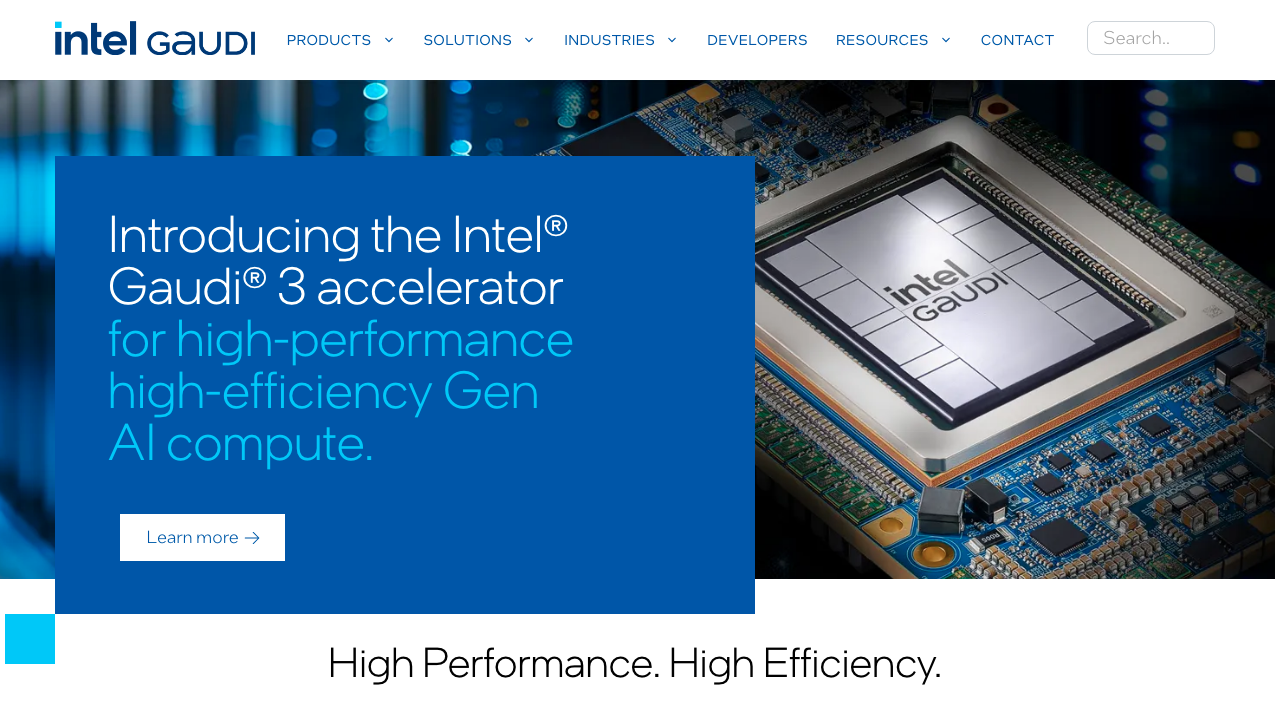

Intel is like a veteran fashion mogul who adopts a radical style every once in a while to keep up with the newest trend. Not wanting to be left behind in AI, it acquired Habana Labs for about $2 billion in 2019. That bet produced a competitive AI processor, Gaudi 3, whose dedicated matrix multiplication engines compete directly with Nvidia's tensor core technology.

Intel's software platform is called oneAPI. It stands out by offering a single programming model across CPUs, GPUs, FPGAs and other accelerators rather than GPUs alone, which gives customers flexibility.

Despite its efforts to stay in the AI game, Intel will most likely keep its main focus on x86 products. It's unclear whether its engineers are still committed to launching the planned Falcon Shores chip in 2025. Becoming an all-out AI hardware company to secure market share is not easy, considering how far ahead Nvidia and AMD already are.

Unlike the companies above, Groq focuses mainly on inference. Groq is a chip company founded in 2016 by ex-Google engineer Jonathan Ross. It stands out with a memory-driven architecture: its Language Processing Unit (LPU) keeps model data in fast on-chip SRAM rather than external memory. That niche focus gives it an edge in inference speed, which is why it's popular among independent developers running Meta's Llama models.

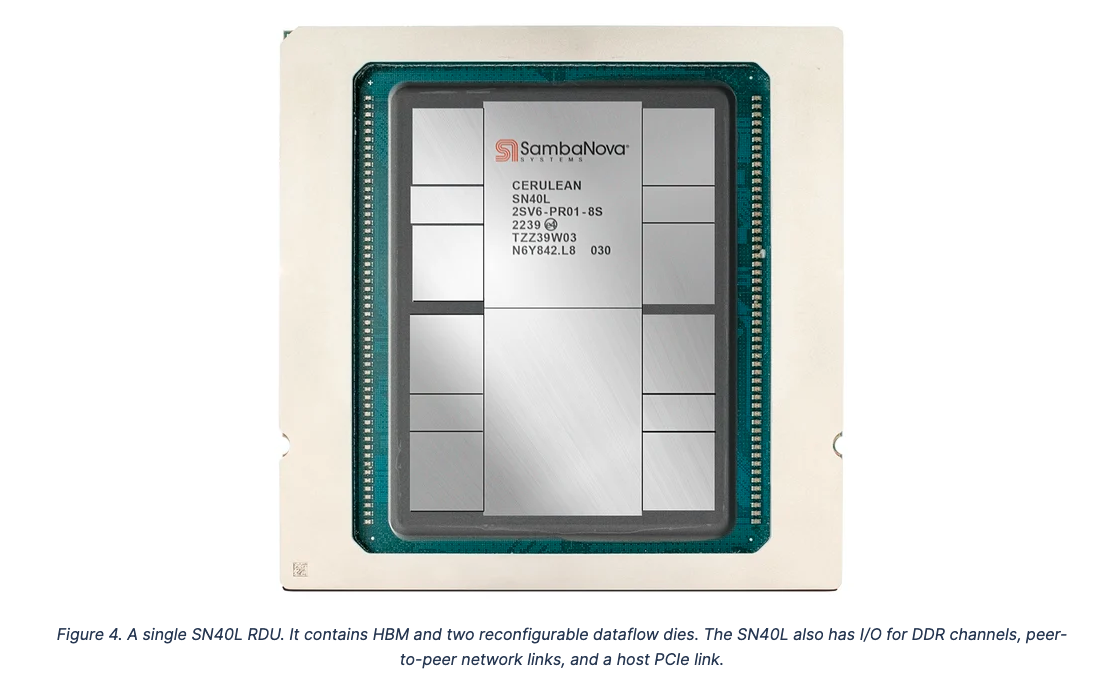

SambaNova Systems is a progressive Palo Alto-based AI hardware builder that is gaining popularity. It was founded in 2017 by Rodrigo Liang, a former Sun Microsystems engineering VP, and a team of Stanford researchers. What makes the company unique is how versatile its hardware is: its lead product, the SN40L, uses a reconfigurable dataflow architecture.

SambaNova is like the ultimate yoga guru, preaching flexibility while always asking for tougher challenges. Its design optimizes the pathways data takes through the chip, which lets it run large-scale models efficiently. Its flagship software platform for configuring the hardware is called SambaFlow.

Another company that thinks outside the box is Cerebras. It went big from the get-go, building chips far larger than the conventional norm and offering highly customized systems for complex, specialized R&D use cases. Its engineering is changing how companies think about inference efficiency: by etching a huge number of cores onto a single silicon wafer, it puts computation and memory on the same chip. It's as if they're setting the stage of AI for our entire galaxy.

The company was co-founded in 2016 by CEO Andrew Feldman. It became famous in 2019 when it unveiled the biggest chip ever built, the Wafer Scale Engine (WSE). If you think OpenAI's models are huge, Cerebras claims its systems can train models many times bigger. That is why its clients include government research labs and institutions like the Mayo Clinic.

Last but not least: Qualcomm. This chip company is well established in the Internet of Things (IoT) market, and we know it for powering many smartphones with its Snapdragon processors. Qualcomm has a big footprint in edge computing and is setting its sights on the automotive industry, including autonomous driving.

To conclude, it's safe to say that Nvidia sits at the top of the hill, reaping the rewards of its innovations in the GPU and the CUDA ecosystem. That said, let's not forget that AMD is an avid learner and has been wise to partner with and acquire solid companies to leverage their engineering teams. As for the rest of the underdogs, in my opinion each of them has a chance at a breakthrough. That could come from designing new chip blueprints, better aligning their software with their hardware, discovering new materials and alloys, or collaborating with data centers on energy efficiency.