LLMs & The Compute Dilemma

Despite our neuroplasticity & ability to learn, our brains transfer thought information in a very limited way, just a few bits per second. Its also part of the reason why, it took us Homo sapiens a few hundred thousand years to learn how to adapt & survive efficiently.

LLMs became a consumer technological breakthrough because they are able to closely emulate human brain function (& reason faster). Writing code, classifying text, summarize... are just a few examples of what they can handle.

2024 offered us a buffet of conflicting signals; from ambition, fear, existentialism, to wizardry.

Google is so pumped up...they even decided to give their platform an astrological sign (Gemini) and while large companies (despite a consensus for alignment) are constantly rushing to benchmark their models, it's hard to speculate what scaling potential lies ahead. What is factual though, is that these models can amplify intelligence the wrong way, & misdirect consumers (like some high schoolers quickly found out).

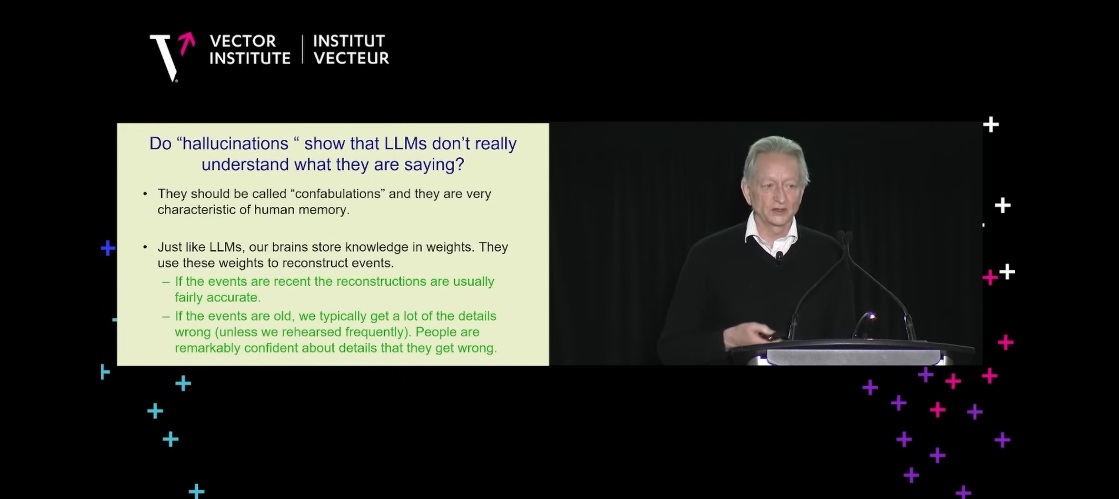

Dr.Hinton, one of the top experts in the field, gave us an example on taking something for granted by reminiscing (in a talk he gave recently) how in 2006; a conference organizer rejected his time slot for a lecture on AI, because another academic had chosen to present a talk on Deep Learning already.

Go figure - it's like telling Richard Pryor to stop joking about social norms because you've had enough of it.

Last year, Dr. Hinton emphasized at several conferences that AI isn't immune from recklessnes.., meanwhile a strict California regulation bill focusing on constraint gets derailed & vetoed (Governor Newsom however stated that he did not want to "thwart the promise of the technology" to advance the public good).

Neural networks have been applied in language research since the 1980's but a lot of academics (unlike Dr.Hinton who was very intrigued) treated them with skepticism.

In the 90's researchers kept focusing on improving math, refining, & optimizing learning algorithms.

As the dot-com boom cooled in the 2000's, it became clearer which company had a promising future with respect to their niche. Jensen Huang for instance, had a feeling that GPUs were going to revolutionize hardware & had a strong foresight for demand of powerful scientific computing hardware (which inspired the development of CUDA).

After Standford professor Fei-Fei Li facilitated deep learning testing with the Imagenet platform in 2009, a team of grad students at the University of Toronto came up with the Alexnet CNN model - winning the annual vision challenge (ILSVRC) in 2012.

Designed initially for low-mid level programming, the CUDA platform eventually shifted its focus on deep learning - in part inspired by Imagenet & the Alexnet image classification milestone - which set a new precedent for leveraging data with massive amounts of parameters & using GPUs for deep learning training.

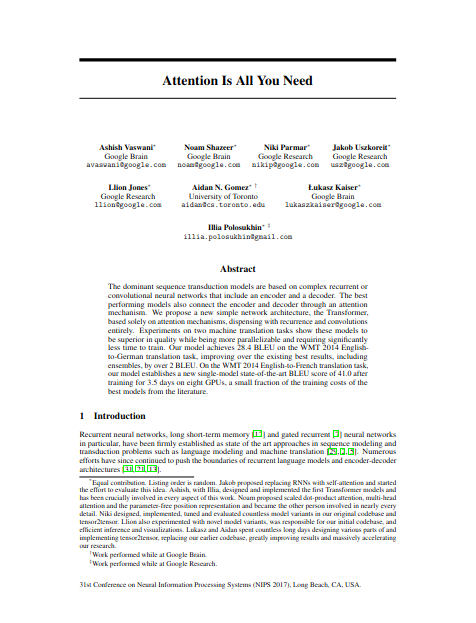

The number of teams participating at the annual vision challenge kept growing until the Imagenet benchmark was outgrown around 2017. Which is when an academic paper published by a team of Google engineers called "Attention Is All You Need" essentially democratized AI, and paved with the way for building large scale applications with the Transformer architecture.

The architecture destabalized the conventional way of processing tokens sequentially (step-by-step) and made computation more efficient (& much less expensive) by processing sequences of data all at once. This essentially pushed the limits of CNNs with the development of vision transformers.

Despite the argument for leveraging human intelligene, LLM's have ignited numerous moral debates. Experts like Dr.Hinton associate open-sourcing to selling missile blueprints at the corner store, while Andrew Ng (computer scientist & co-founder of Google Brain) on the other hand, argues that in order to establish an equal level playing field for everyone, open-sourcing (e.g. Llama models) with strong guardrails and an emphasis on collaboration is the way to go.

At the CES 2025, Nvidia showcased Nemotron & Cosmos Nemotron. Built on Meta's Llama models, these platforms were introduced as industry grade platforms to scale AI agents.

Nemotron is an LLM designed to help organizations with optimizing tasks like customer service & automation.., while the Nemotron Cosmos is geared for visual data processing (e.g. healthcare, sports analysis).

However, the real world challenge isn't in marketing more AI agent platforms. Operating costs of AI are much higher than usual software (as you've probably noticed). The more tokens are burned through your computational demands, the more processes (e.g inferences) are triggered, the higher the watt usage is consumed by your hardware & data centers.

Undoubtedly Nvidia will have a lead on a lot of neural network frameworks (for a long time) & their close partnership with META will make both companies powerhouses in hardware r&d.

Coincidently, META is currently supporting r&d on a new transformer called Byte Late Transformer (BLT). The idea is to be able to compute even smaller lumps of data than regular tokens...you've guessed it - byte sized tokens. This allows for more precision in selecting quality but espcially raw data.

This is where the hurdle only begins. AGI is still on the run and that puts manufacturers in a tough position with rapidly changing designs, where at some point we might face supply chain issues. Combined with the murky waters of geopolitics - we will be forced to find better & more sustainable inferencing techniques. Neuromorphic architecture & quantum computing are amongst the leading topics of that context...but will requires immense government investment and policy development for prioritizing compute needs.

At the moment GenAI platforms like ChatGPT, Midjourney etc. face important obstacles in deep learning, like sustaining 'semantic coherence'.

Techniques like Retrieval Augmented Generation (RAG) are developed so that LLMs can leverage vector data banks (& improve contextual reasoning), while Spatial Intelligence r&d (like Fei-Fei-Li's World Labs) is aimed at developing systems for image depth perception & extending environmental acumen.

To conclude, it's important to realize the challenge of integrating AI assistants with humans doesn't only remain in power consumption.

In the field of robotics for example, Moravec's paradox has been a paramount subject for decades. Bridging various ML techniques and integrating them with sensors will take time, in order to at least match the sensorimotor skills humans have carved in their cerebrums through evolution (over thousands and millions of years).

Progressively welcoming AI into society (on a large scale) will require a lot of structural innovation for integrating multi-modal data input sytems.